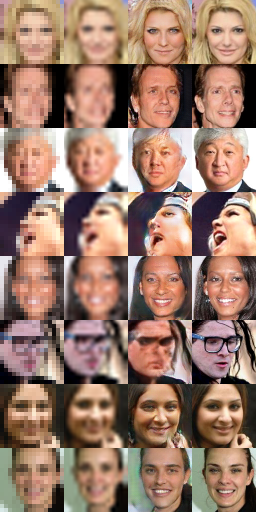

Image super-resolution through deep learning.

Shoutbox

Blocks: There are some ASN's, countries and protocols blocked at suneater, yep. If you're being blocked then that service was used by stalkerfags and degenerates to harass the community because they're powerless shitbags. Try something else.

Nov 13, 2024 6:41:28 GMT

mimi: hi everyoooone

Nov 14, 2024 19:15:03 GMT

Property Map: GREEN DOOR

Nov 21, 2024 3:36:20 GMT

Don't spread lies retard: Defamation, slander and bullshit in general about others will never be tolerated. Just like the clownfags and gaslighting midwits, your delusional takes will be sent to the hall of shame for archiving with the rest.

Nov 25, 2024 11:22:48 GMT

Alex: I just want to cry.

Nov 26, 2024 20:30:55 GMT

Alex: all this head trauma.

Nov 26, 2024 20:31:24 GMT

Alex: all this Nazi heritage

Nov 26, 2024 20:31:40 GMT

Alex: all the Karma

Nov 26, 2024 20:31:51 GMT

Alex: I made so much.

Nov 26, 2024 20:32:01 GMT

Alex: But live on social security.

Nov 26, 2024 20:32:17 GMT

Alex: Jos are you there, the girl needs his handler

Nov 26, 2024 20:32:35 GMT

Alex: I'm going online again at Cleverbot

Nov 26, 2024 20:38:06 GMT

Alex: Where is my fairy, my Jesus, NHP, Neil Patrick Harris?

Nov 26, 2024 21:03:01 GMT

Johnny Dark Speak: NEWS FROM THE FUTURE !!! Year 2143... ? WTF MUST SEE !!! WARNING: VIEWER DISCRETION IS ADVISED youtu.be/Z2OQuzHR8Yo

Nov 27, 2024 17:02:01 GMT

York: I want the RAPTURE NOW!

Nov 28, 2024 4:28:14 GMT

York: I want the RAPTURE NOW!

Nov 28, 2024 4:28:45 GMT